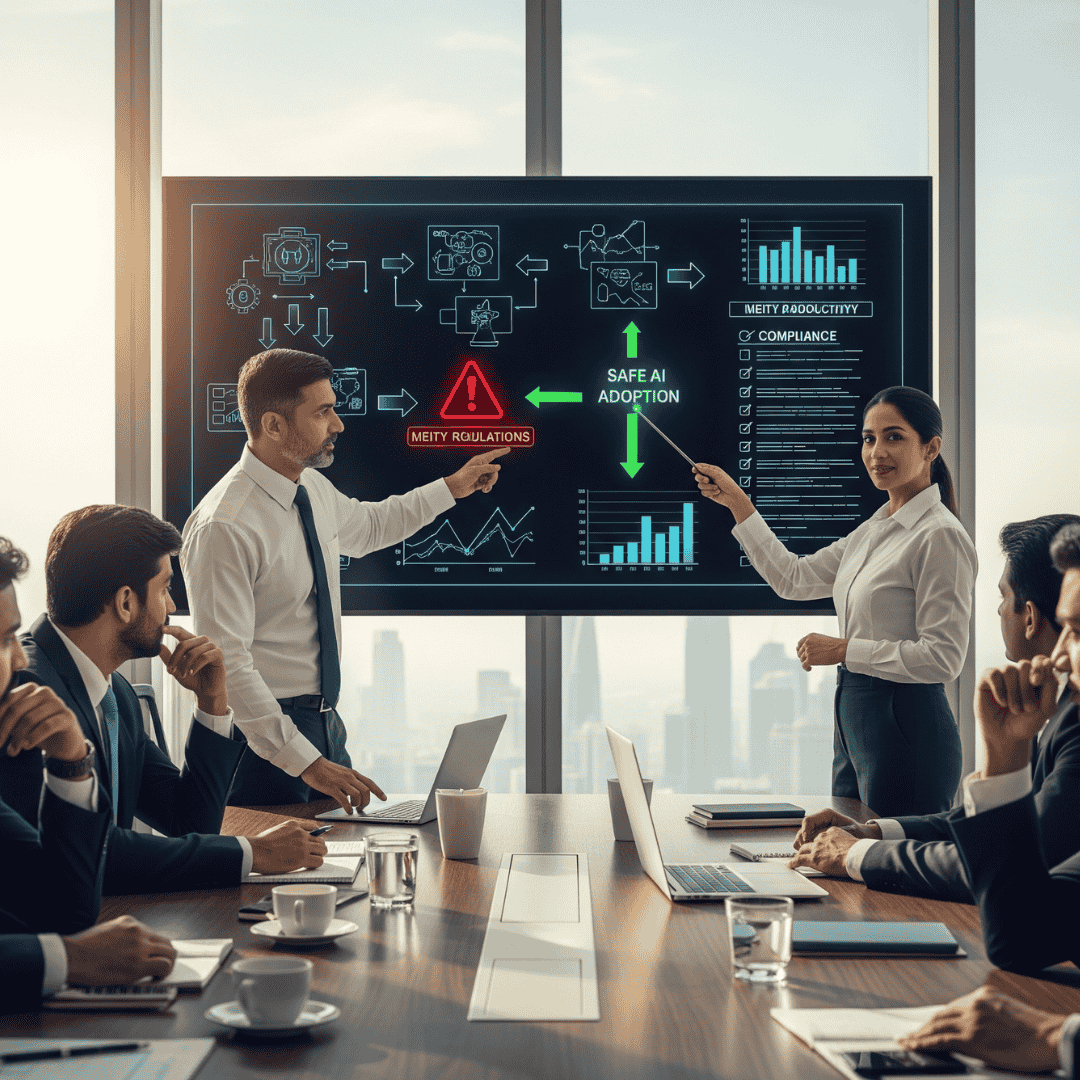

You want AI to lift output and cut costs. Your board wants speed. Your teams want relief from routine work. Good. Here is the part that should make you uneasy: the rules are getting tighter, deepfakes are rising, and sloppy AI use can expose your data, your customers, and your brand. If you treat AI like a shiny shortcut, it will bite hard.

You have seen the headlines. Fake videos that look real. Chat tools that leak prompts. Models that reproduce licensed material they should not. One wrong move and legal, financial, and reputational damage follows. Yet the pressure to “do AI now” keeps growing. So how do you ship real value without stepping on a legal landmine?

What Most Firms Get Wrong

Most rollouts jump straight to pilots and skip governance. They copy prompts from the internet, push real data into public tools, and hope for the best. Procurement signs platform contracts without a risk map. HR writes a one‑page policy that nobody reads. Security teams get involved only after a scare. This is not adoption. This is gambling.

Blueprint for Safe and Useful AI

This is a practical, no‑nonsense path that keeps you compliant and productive. Follow it in order. Keep it boring. That is how you win.

1. Use cases first, tools second

Pick three use cases with clear value and low risk. Examples: draft proposals from approved templates, summarise internal reports, classify customer tickets. Avoid anything that touches sensitive data until controls are in place. Define the expected output, the quality bar, and the success metric.

2. Create a clean data lane

Separate data into three buckets: public, internal, restricted. Public can go into approved external models. Internal stays within your tenant. Restricted never leaves secure stores. Mask PII. Strip secrets. Log every transfer. If a prompt needs sensitive inputs, stop and redesign the flow.
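Here is one way the three buckets could look in code. Treat it as a minimal sketch, not a finished control: the destination names (`external_model`, `tenant_model`), the `prepare_prompt` helper, and the email-only mask are all assumptions made for illustration. Real PII masking and secret stripping need far more than one regular expression.

```python
import re
from enum import Enum


class Bucket(Enum):
    PUBLIC = "public"
    INTERNAL = "internal"
    RESTRICTED = "restricted"


# Hypothetical destinations; the names are illustrative only.
ALLOWED_DESTINATIONS = {
    Bucket.PUBLIC: {"external_model", "tenant_model"},
    Bucket.INTERNAL: {"tenant_model"},
    Bucket.RESTRICTED: set(),  # restricted data never leaves secure stores
}

# Rough email pattern; a stand-in for a proper PII detection step.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")


def mask_pii(text: str) -> str:
    """Replace email addresses with a placeholder."""
    return EMAIL_RE.sub("[EMAIL]", text)


def prepare_prompt(text: str, bucket: Bucket, destination: str) -> str:
    """Return masked text if this bucket may go to this destination, else raise."""
    if destination not in ALLOWED_DESTINATIONS[bucket]:
        raise PermissionError(
            f"{bucket.value} data may not be sent to {destination}"
        )
    return mask_pii(text)
```

Calling `prepare_prompt(text, Bucket.RESTRICTED, "tenant_model")` raises instead of sending, which is the "stop and redesign the flow" rule expressed as a hard failure. A real lane would also log each transfer.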

3. Choose platforms with guardrails

Favour enterprise versions with audit logs, encryption at rest and in transit, regional data control, tenant isolation, and configurable retention. Turn off training on your prompts and outputs by default. Require SSO, MFA, and role-based access. Keep a short vendor list so your attack surface stays small.

4. Write a policy people can use

Four pages, plain language, zero fluff. What data can be used, where, and by whom. What must be reviewed by a human. What must never be pasted into any model. What to do if something goes wrong. Train on real examples. Test understanding. Make it part of onboarding.

5. Put humans in the loop

For any AI output that changes money, identity, or reputation, you need a human check. Define who reviews, how they review, and what gets archived. Keep a sample of prompts, outputs, and approvals for audit. Track accuracy, bias flags, and error rates.

6. Build an approval gate

No team spins up a new AI tool without a short form that covers data types, security needs, and business value. Security signs off. Legal signs off. Procurement signs off. Fast path for low risk. Slower path for anything touching sensitive or regulated data.
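The gate can be as small as the sketch below. Everything named here is hypothetical: the `ToolRequest` fields mirror the short form described above, and the set of data types that trigger the slower path is an assumption you would replace with your own classification.

```python
from dataclasses import dataclass

# Assumed labels for data that forces the slower review path.
SLOW_PATH_DATA = {"sensitive", "regulated", "restricted"}

# The three sign-offs named in the text.
REQUIRED_SIGNOFFS = ("security", "legal", "procurement")


@dataclass
class ToolRequest:
    tool_name: str
    data_types: set[str]
    security_needs: str
    business_value: str


def review_path(request: ToolRequest) -> str:
    """Route to 'slow' if any declared data type is sensitive, else 'fast'."""
    return "slow" if request.data_types & SLOW_PATH_DATA else "fast"


def is_approved(signoffs: dict[str, bool]) -> bool:
    """A request is approved only when every required team has signed off."""
    return all(signoffs.get(team, False) for team in REQUIRED_SIGNOFFS)
```

A missing sign-off counts as a "no", so a half-completed form can never pass by accident.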

7. Control prompts like code

Store prompts in a repo. Version them. Review changes. Tag each prompt with the use case, risk level, and owner. Ban copy‑paste prompt chains from random sources. If a prompt produces unstable results, it does not go to production.
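To make the tagging concrete, here is a minimal sketch of a prompt record that could live next to the prompt text in a repo and be checked in review. The `PromptRecord` fields and the three risk levels are assumptions for illustration, not a standard schema.

```python
from dataclasses import dataclass

# Assumed risk scale; use whatever levels your policy defines.
RISK_LEVELS = {"low", "medium", "high"}


@dataclass(frozen=True)
class PromptRecord:
    prompt_id: str
    version: str
    use_case: str
    risk_level: str
    owner: str
    text: str


def validate(record: PromptRecord) -> list[str]:
    """Return a list of problems; an empty list means the record is complete."""
    problems = []
    for field_name in ("use_case", "owner", "text"):
        if not getattr(record, field_name).strip():
            problems.append(f"missing {field_name}")
    if record.risk_level not in RISK_LEVELS:
        problems.append(f"unknown risk level: {record.risk_level!r}")
    return problems
```

Running `validate` in a pre-merge check means an untagged or ownerless prompt is caught in review, the same way a failing test blocks a code change.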

8. Keep a record of everything that matters

Log who used what model, with which prompt pattern, and what output was accepted or edited. Save evidence of human oversight. Record incidents and fixes. If a regulator asks, you can show your homework.
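A record like this does not need heavy tooling. The sketch below writes one JSON line per event; the field names, the three outcome values, and the `audit_entry` helper are assumptions for illustration, and a real setup would send the line to an append-only store rather than just return it.

```python
import json
from datetime import datetime, timezone
from typing import Optional

# Assumed outcome labels, based on "accepted or edited" plus a rejection case.
OUTCOMES = {"accepted", "edited", "rejected"}


def audit_entry(
    user: str,
    model: str,
    prompt_id: str,
    outcome: str,
    reviewer: Optional[str] = None,
) -> str:
    """Build one JSON line recording who used which model and what happened."""
    if outcome not in OUTCOMES:
        raise ValueError(f"unknown outcome: {outcome!r}")
    return json.dumps(
        {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "model": model,
            "prompt_id": prompt_id,
            "outcome": outcome,
            "reviewer": reviewer,  # evidence of human oversight, if any
        },
        sort_keys=True,
    )
```

One line per event keeps the log easy to search when a regulator, or your own incident review, asks what happened and who approved it.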

9. Plan for the ugly day

Assume someone will paste a secret. Assume someone will share a fake video. Write a simple playbook: detect, contain, report, fix, learn. Name the people on call. Practice once a quarter. Speed beats panic.

10. Prove value in weeks, not years

Publish a small scorecard every month. Hours saved, errors reduced, time to draft, time to resolve. Tie it to money saved or revenue earned. AI that cannot prove value should not survive budget review.

Eye‑Opening Reality Check

AI will not replace your team. Teams that use AI well will beat teams that do not. The gap is already visible. Firms with clear guardrails and focused use cases ship content faster, deliver cleaner support, and produce sharper insights. They avoid fines, keep trust, and still move fast. Firms that rush in without discipline produce noisy drafts, leak private notes, and then spend weeks cleaning up the mess.

What Good Looks Like in 90 Days

Week 1 to 2: shortlist use cases, map data, pick one platform, draft policy, train champions.

Week 3 to 6: run pilots on internal documents, set up logging, define review steps, track quality.

Week 7 to 10: broaden to customer-facing drafts with human checks, integrate with your systems.

Week 11 to 12: publish the scorecard, kill weak use cases, double down on what works.

This plan is simple on purpose. It reduces risk while delivering real output. No drama. No jargon. Just focus and control.

Where Compliance Meets Common Sense

Deepfakes, content take‑downs, consent, model transparency, and audit trails are not abstract ideas. They shape how you deploy AI at work. Treat them as part of daily operations. Keep proof of decisions. Respect data ownership. Review your stack when rules change. That is how you stay safe and still get the gains.

The Panthak Edge

We do not chase hype. We design adoption that survives audits and board reviews. We cut noise, build the process, select the right platforms, and leave your teams with habits that stick. You get speed with safety and results you can measure.

Ready to adopt AI without risking your brand?

Tell us where you want impact. We will map safe use cases, set up guardrails, and have your first wins live in weeks. Fill in the quick form below and our team will reach out with a focused plan.